
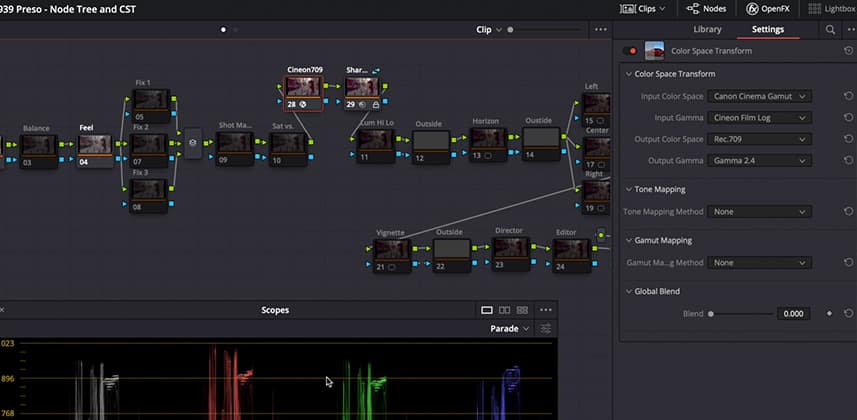

Integrating a ‘Color Mangled Workflow’ into fixed node trees: Following up on two Insights

In previous Insights, I got you up to speed on the evolution of my Fixed Node Tree and on what I call the ‘Color Mangled Workflow’ (which relies on Resolve’s Color Space Transform ResolveFX plugin). I have two follow-ups to share: my workflow has evolved, and I want to pick up an item I left open in the Color Mangled Workflow Insight.

The continuing evolution of my Fixed Node Tree

Based on great feedback in the comments on my previous Insight on this topic, discussions with Team Mixing Light, and grading several shows with these fixed node trees, I’ve further optimized my Resolve node tree. Some of the changes are performance enhancements. Others reflect how I’m actually using the node tree in practice. I’m offering up these changes to give you an idea of how to think about your personal style, and how you can (and should) adapt your fixed trees to better reflect how you work, or how your team likes to work if you collaborate with other colorists.

Digging further into the Color Mangled Workflow (with Resolve’s Color Space Transform plugin)

My thinking on, and experience with, Resolve’s Color Space Transform ResolveFX plugin have evolved quickly over the past month as I’ve used it on several shows. After studying it more closely, coming to a greater understanding of the options it presents, and modifying my workflow across those shows, I’m really happy with the results. In this Insight you’ll learn:

- Where have I settled on placing the Color Space Transform plugin in my node tree?

- Why have I set up two fixed node trees for each camera in a show? (hint: tone mapping)

- Where did my inspiration for using Cineon in the CST plugin come from?

- How has the Stream Deck XL suddenly accelerated the speed of working with multiple fixed node trees, making this approach much more viable when the clock is ticking and you’re working against a deadline?

A few quick thoughts on moving back to the new New Mac Pro

This Insight is my first since moving back to the Mac Pro. I’ll offer a few quick thoughts (including why now) at the end of this Insight.

Comments? Questions? Ask below!

As always, we love member comments, questions, and observations. It’s how we all learn.

-pi
