Understanding These Key NAB 2018 Buzzwords

Artificial Intelligence, automation, Machine Learning, “algorithms”: these are becoming the latest buzzwords of our industry. NAB Show 2018 was all about AI and ML.

In this Insight, I explain the background and basics of these technologies, as a sort of cheat-sheet, for those of you who want to better understand what, why and how they are being implemented. This will be useful both professionally and personally, as these tools are becoming ubiquitous across every part of modern society.

This is only a primer, so I won’t comment on any specific products, and I won’t go too deep. There are many arguments, theories and implementations around these technologies worthy of further discussion, which will be part of my forthcoming book on how AI will affect the filmmaking industry.

Defining Artificial Intelligence

Artificial Intelligence (AI) is not new, but it’s recently become more powerful. Processing power has caught up to the theories and science, making them useful.

In 1914, what is often called the first AI was demonstrated: El Ajedrecista, a machine built by Leonardo Torres y Quevedo that used a simple algorithm to play a chess endgame. After WWII, computer scientists really started working on AI, writing programs that required less human input to perform calculations and therefore could be considered “intelligent”. In 1956, John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon coined the term “Artificial Intelligence” to describe this growing field of study. Artificial Intelligence is itself a loose term, describing any machine that has the capacity to take a set of instructions, perform a task and report back, more like a human than a calculator. Basically, any computer program (algorithm) that can solve problems and achieve goals can be called “weak AI”, or more recognizably, “automation”.

AI is used for things like search; image, speech and pattern recognition; game playing; and classification, such as looking at a set of numbers and determining whether it is a valid credit card number or a scammer’s random guess. (Ever noticed how a website knows what kind of card you have after the first few digits?) You’re most commonly interacting with AI in targeted advertising, facial recognition, assisted driving, and search tools.
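To make the credit card example concrete, here is a minimal sketch of that kind of fixed-rule classification. The prefix table is deliberately simplified and the function names are my own, not taken from any real payment library; the validity test is the well-known Luhn checksum.

```python
def card_brand(number: str) -> str:
    """Guess the card network from the leading digits (simplified prefix rules)."""
    digits = number.replace(" ", "")
    if digits.startswith("4"):
        return "Visa"
    if digits[:2] in {"34", "37"}:
        return "American Express"
    if digits[:2] in {"51", "52", "53", "54", "55"}:
        return "Mastercard"
    return "unknown"


def passes_luhn(number: str) -> bool:
    """Luhn checksum: double every second digit from the right, then sum."""
    digits = [int(ch) for ch in number if ch.isdigit()]
    total = 0
    for position, digit in enumerate(reversed(digits)):
        if position % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return len(digits) > 0 and total % 10 == 0


# A widely published test number, not a real card.
print(card_brand("4111 1111 1111 1111"), passes_luhn("4111 1111 1111 1111"))
```

Nothing here learns anything. The rules were typed in by a person, which is exactly what makes this “weak AI”, or automation.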

AI is not adaptable

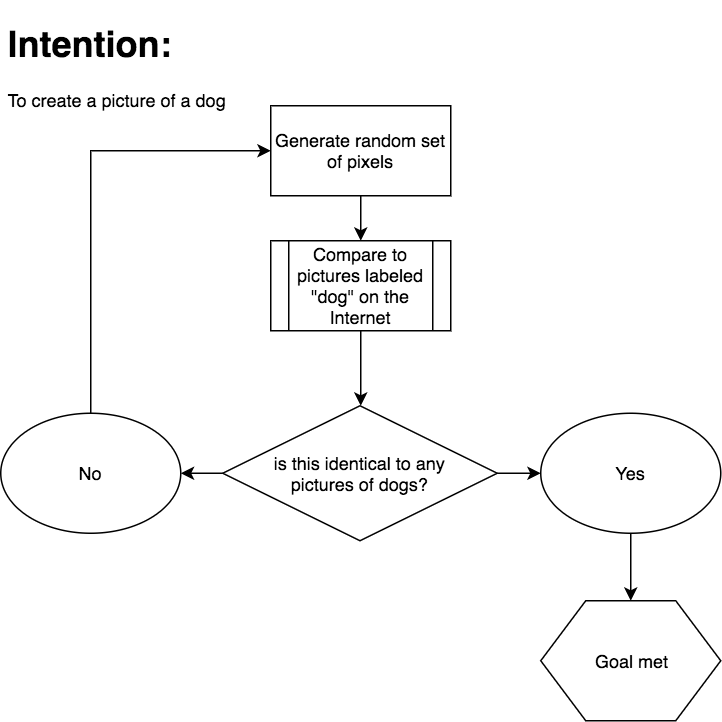

AI is strictly limited to the boundaries set by the programmer, and it is not good with anomalies. Here’s a common, simplified AI algorithmic concept, describing how a computer might perform a search for an image of a dog:

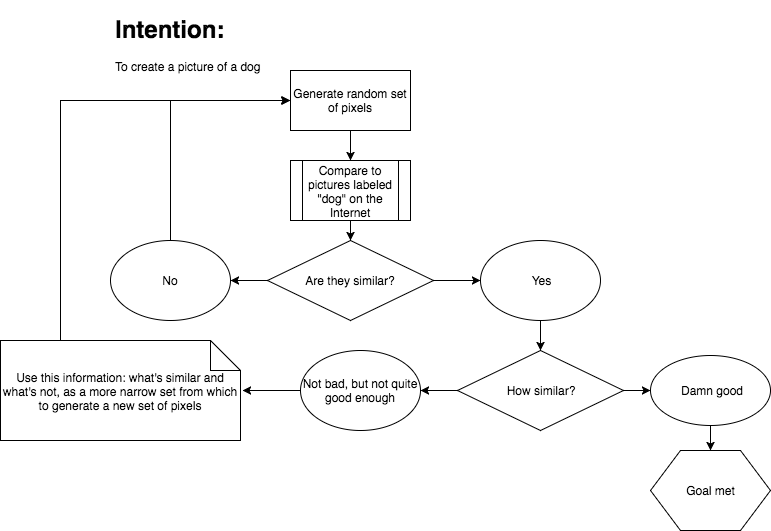
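In code, a fixed-rule search of this kind might look something like the sketch below. It assumes a hypothetical image library where a human has already typed in tags for each picture; the `Image` class, the file names and the tags are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Image:
    filename: str
    tags: set  # keywords entered by a human


def search(images, keyword):
    """Return the filenames whose tags contain the exact keyword."""
    return [img.filename for img in images if keyword in img.tags]


library = [
    Image("rex.jpg", {"dog", "park"}),
    Image("tom.jpg", {"cat"}),
    Image("snoopy.jpg", {"beagle"}),  # a dog, but nobody tagged it "dog"
]

results = search(library, "dog")
print(results)
```

The search finds `rex.jpg` but misses `snoopy.jpg`: the beagle is an anomaly the programmer’s rules did not anticipate.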

Here is another slightly smarter AI concept, this time for creating a picture of a dog:

Defining Machine Learning

Machine Learning (ML) is the ability of a computer program to learn and get smarter.

It does this using feedback, in a similar way to how a child learns: by trial and error. An ML program uses “training data” to recognize patterns, and uses feedback on its outputs to improve its accuracy.

Machine Learning uses its results to adjust future efforts

Here’s a conceptual ML algorithm. The program described here rapidly generates random or slightly informed data, comparing it to all the world’s human versions. It then corrects and narrows down its generated data until it conforms to a human-defined version of what has been requested:
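As a rough sketch of that loop, the toy program below starts from random “pixels”, scores each attempt against a human-made reference, and keeps only the changes that score better. This is a simple hill-climbing search standing in for the feedback idea, not how production ML systems are actually trained; the 16-pixel reference and all the names are assumptions for illustration.

```python
import random


def similarity(candidate, reference):
    """Fraction of positions where the candidate matches the reference."""
    matches = sum(c == r for c, r in zip(candidate, reference))
    return matches / len(reference)


def learn(reference, rng, max_steps=10_000):
    """Start from random pixels and keep only the changes that score better."""
    candidate = [rng.randint(0, 1) for _ in reference]
    score = similarity(candidate, reference)
    steps = 0
    while score < 1.0 and steps < max_steps:
        trial = list(candidate)
        i = rng.randrange(len(trial))
        trial[i] = 1 - trial[i]  # flip one random pixel
        trial_score = similarity(trial, reference)
        if trial_score > score:  # feedback: discard mistakes, keep improvements
            candidate, score = trial, trial_score
        steps += 1
    return candidate, steps


# A tiny black-and-white "picture" standing in for the human-made version.
reference = [1, 0, 1, 1, 0, 0, 1, 0, 1, 1, 1, 0, 0, 1, 0, 1]
learned, steps = learn(reference, random.Random(0))
print(learned == reference, steps)
```

The program is never told which pixels are wrong. It only gets a score back, and uses that score to adjust its next attempt.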

As it makes mistakes, it learns from them and keeps trying until it gets it right. This is also known as “adaptive machine learning”, or AML. Some people loosely call it “Strong AI”, although that term is more often reserved for Artificial General Intelligence. Blaise Agüera y Arcas of Google gives a great TED Talk that explains a lot of how this all works.

Defining Deep Learning and Neural Networks

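The earliest Neural Network, Frank Rosenblatt’s Perceptron, was developed in 1958. In 1986, Geoffrey Hinton, with David Rumelhart and Ronald Williams, popularized backpropagation, the training method that underpins Deep Learning, and in 2012 computational power caught up far enough to prove the theory.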

Essentially, a Neural Network is a way of harnessing computer power in a manner less like a machine, and more akin to the human brain. Beyond the binary of yes/no, it can learn patterns and adjacencies. This is how our algorithm can determine how close to a picture of a dog its randomly-generated pixel cluster is.

Instead of saying “is this a dog -> yes/no”, it can say “how similar is this to a picture of a dog, on a scale of 0 – ∞?”. In the AI yes/no version, it compares what it creates to correct answers as defined by human input. In the ML version, it learns by trial, error and adaptation until it gets it right.

In the real world, this is why we don’t have to use exact keywords and tags when we search for something, and how targeted advertising knows we might want to take a vacation soon because our recent Facebook status was “I am exhausted”. Neural Networks allow the computer to do all these things much faster, more accurately and far more elegantly than before, making the technology useful by being more efficient at certain tasks than a human.

“The main problem with the real world is that you can’t run it faster than real time.” – Yann LeCun

Where we stand in 2018 (and the notion of Artificial General Intelligence)

Computers, rather than limiting their knowledge to human input, can learn, and get smarter. They can make their own assumptions beyond what inputs they have been given.

However, as most experts point out, this is a long way removed from true intelligence, or what scientists call Artificial General Intelligence (AGI). Computers have no awareness, so their capability is limited to finding and recognizing patterns. The way that computers learn is similar to the way babies learn: trial and error, with adjustments along the way. They can do it incredibly fast, and with almost unlimited data to work from.

However, they have no awareness of “what” it is they’re learning or doing, what it means, and what’s around it. There’s no contextual awareness. As Brian Bergstein summarized in MIT Technology Review, “Another way of saying this is that machines don’t have common sense.”

These ‘modern’ technologies are decades old

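The first chatbot was demonstrated in 1966. Now we are seeing them every day, as they have replaced many front-line customer service representatives. Autonomous vehicles, another well-known feat of modern AI, were successfully demonstrated in the 1980s: Ernst Dickmanns’ team at Bundeswehr University Munich, working with Mercedes-Benz, had a driverless van running on the autobahn in 1987, while in the U.S. the military’s DARPA programme was funding its own autonomous vehicle research.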

Everything we are now seeing thrust into our daily lives as “new” technology based on this “disruptive” thing called AI is between 30 and 100 years old. What took so long for this to reach us today? Funding for these breakthroughs ground to a halt because the development hit a brick wall with mass implementation. The processing power required to take these innovations to the people had not caught up, and was decades away.

Until it wasn’t.

Compute power is finally equal to the AI / ML mission

As soon as the hardware caught up to the software and the Internet finally contained enough information to provide a ready-made significant dataset, the concepts and R&D behind AI and ML were more than ready to deploy. They needed no runway, no testing, little to no development. Scientists simply dusted off their notebooks and got back to work.

It was an easy win for investors, too, as the applications for this technology had already grown within the online ecosystem. Society was accustomed to having computers augment our daily lives, so we barely noticed when they basically just… got more clever.

“The future is already here. It’s just not evenly distributed yet.” – William Gibson

An AI is able to recognize patterns, including images, text and speech. If you give it a set of expectations and parameters, it can achieve the goal set within the exact instructions given. It has two sets of inputs to “learn” from: either a set given to it specifically by human input, or information on the Internet.

The Internet has become the data set

AI and ML can complete tasks very fast, which allows them to work through large sets of tasks in a small amount of time. The more tasks a system completes, the more it recognizes patterns and “learns”. The limitation of AI comes down to, as Gary Marcus of NYU puts it, “what can be learned in supervised learning paradigms with large datasets”. Social media has humans inputting huge amounts of raw material, hourly, giving the raw compute power enough data points to actually get its job done.

“If a typical person can do a mental task with less than one second of thought, we can probably automate it using AI either now or in the near future.” -Andrew Ng

Putting it all together

- Artificial Intelligence refers to a set of fixed instructions, allowing for complex automation of tasks.

- Machine Learning refers to a set of instructions that requires the program to assess its performance, detect patterns, and retain that information in order to “learn”, or become more accurate over time. Machine Learning’s effectiveness relies on the ability to store and access growing amounts of “training data”, but the more it is used, the “smarter” it becomes.

- Neural Networks are a non-linear type of computer logic, based on a model that more closely resembles the human brain’s way of processing than the yes/no models of traditional computer programs. What makes this special is that it allows for inferences and grey areas. A computer gains the ability to know that while <beagle> is not equal to <dog>, it is similar. It knows that puppy, mutt, perro and 狗 are also strongly related to <dog>. Neural Networks allow for tools such as natural-language processing and sentiment analysis, which greatly help computers understand and appear to behave like humans.
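One common way to express “not equal, but similar” is to represent each word as a list of numbers and measure the angle between them (cosine similarity). The sketch below uses hand-made, three-number vectors purely for illustration; in a real system these values are learned by the network and are far longer.

```python
import math

# Hypothetical, hand-made vectors; real ones are learned, not typed in.
VECTORS = {
    "dog": [0.9, 0.8, 0.1],
    "beagle": [0.8, 0.9, 0.2],
    "puppy": [0.9, 0.6, 0.3],
    "car": [0.1, 0.2, 0.9],
}


def cosine_similarity(a, b):
    """1.0 means pointing the same way; values near 0 mean unrelated."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def relatedness(word_a, word_b):
    return cosine_similarity(VECTORS[word_a], VECTORS[word_b])


print("beagle" == "dog")                      # the yes/no answer
print(round(relatedness("dog", "beagle"), 2))  # the graded answer
print(round(relatedness("dog", "car"), 2))
```

The yes/no comparison says beagle and dog are different words. The graded score says they are close neighbours, while dog and car are not.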

Applying these terms to NAB announcements

From this basic explanation, you should be able to infer in layman’s terms what any new “AI”-based product announced at NAB this year is, and does. There’s a lot of search, which could be as simple as metadata tags, or as complex as text, speech or image recognition. There’s also plenty of “computer vision”, where a system contains an algorithm trained to recognize faces and other objects that are commonly featured and correctly tagged on the dataset that is the Internet.

AI-based “creative” tools take the contents of the internet as training data, using ML-style algorithms to compare randomly-generated content to existing works considered by humans as “good”. For example, an AI color grading system will compare your footage to similar shots in existing video and film works, and create a look that is similar within the boundaries of color space and legal limits.
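To give a feel for the color grading example, here is a deliberately crude sketch: it shifts each color channel of your footage so its average matches a reference shot, then clamps the result to an assumed 8-bit “legal” range of 16 to 235. Real products are far more sophisticated and I am not describing any of them; the function names and pixel values are invented for illustration.

```python
LEGAL_MIN, LEGAL_MAX = 16, 235  # assumed 8-bit video legal range


def channel_means(pixels):
    """Average R, G and B values across a list of (r, g, b) pixels."""
    count = len(pixels)
    return tuple(sum(p[c] for p in pixels) / count for c in range(3))


def match_look(footage, reference):
    """Shift each channel toward the reference average, within legal limits."""
    src = channel_means(footage)
    ref = channel_means(reference)
    offsets = [ref[c] - src[c] for c in range(3)]
    graded = []
    for pixel in footage:
        graded.append(tuple(
            min(LEGAL_MAX, max(LEGAL_MIN, round(pixel[c] + offsets[c])))
            for c in range(3)
        ))
    return graded


footage = [(100, 100, 100), (140, 120, 120)]    # flat, grey-ish shot
reference = [(200, 150, 100), (240, 150, 80)]   # warm shot with the "look"
graded = match_look(footage, reference)
print(graded)
```

The second pixel’s red channel would land at 240, so it is clamped to 235: the look is copied only as far as the legal limits allow.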

AI and ML are fantastic tools that we’re starting to see used in ways that can really change how we work. Understanding them is key to appreciating them and using them to their greatest advantage. The best part is that the more we use them, the better they get!